Data collection for this study commenced on April 23, 2023, following approval from the Institutional Review Board at WCG Clinical (Study Number: 1352290). All participants provided their informed consent for data usage, with those featured in images also consenting to the publication of their identifiable images. We crafted an informed consent form that aligns with the EU’s General Data Protection Regulation (GDPR) and similar data privacy laws.

Vendors involved in the data collection were mandated to ensure that all image subjects, both primary and secondary, submitted signed informed consent forms. Additionally, vendors had to obtain signed copyright agreements from the appropriate rightsholders to secure the necessary intellectual property (IP) rights for the images. Only individuals who were above the age of majority in their country and capable of entering into contracts were eligible to submit images. Regardless of their country of residence, all image subjects retained the right to withdraw their consent, without any repercussions on the compensation they received for their images. This right is often not granted in pay-for-data arrangements and is typically absent from many data privacy laws outside of GDPR and similar frameworks.

Data annotators responsible for labeling or quality assurance (QA) were given the opportunity to disclose their demographic information as part of the study. They were also provided with informed consent forms, granting them the right to withdraw their personal information. Some data annotators and QA personnel were sourced through crowdsourcing, while others were employees of the vendor.

To validate the English language proficiency of participants—including image subjects, annotator crowdworkers, and QA annotators—each participant was required to correctly answer at least two out of three randomly selected multiple-choice questions from a question bank before project commencement. This measure ensured that all participants understood the project’s instructions, terms of participation, and related forms. Questions were randomized to reduce the likelihood of answer sharing among participants.

In an effort to prevent coercive data collection practices, we instructed data vendors not to implement referral programs to incentivize participants to recruit others. Furthermore, vendors were advised not to provide support beyond platform tutorials and general technical assistance during the sign-up process or project submissions. This approach aimed to avoid scenarios where participants might feel pressured or rushed during critical stages, such as reviewing consent forms. We also ensured that project description pages contained important disclosures regarding the public sharing and use of the collected data, risks, compensation, and participation requirements, so individuals were well-informed before committing their time.

Images and annotations were crowdsourced through external vendors following extensive guidelines provided by our team. Vendors were instructed to only accept images captured using digital devices released in 2011 or later, equipped with at least an 8-megapixel camera capable of recording Exif metadata. Accepted images had to be in JPEG or TIFF format (or the default output format of the device) and were required to be free from any post-processing, digital zoom, filters, panoramas, fisheye effects, and shallow depth-of-field. Images had to maintain an aspect ratio of up to 2:1 and be sufficiently clear to allow for the annotation of facial landmarks, with motion blur permissible only if it resulted from subject activity (e.g., running) and did not hinder annotation.

Each subject was allowed to submit a maximum of ten images, which had to depict actual individuals, not representations like drawings or reflections. Submissions were restricted to images featuring one or two consensual subjects, with a requirement that the primary subject's entire body be visible (including the head) in at least 70% of the images provided by each vendor. Additionally, the head must be visible (with at least three identifiable facial landmarks) in all images submitted. Vendors were instructed to avoid collecting images that included third-party intellectual property, such as trademarks and landmarks.

To promote diversity in the images collected, we requested that images ideally be taken at least one day apart, recommending that images of a subject be captured over a broad time span, preferably at least seven days apart. If images were taken less than seven days apart, the subject was required to wear different clothing in each image, and the images had to be captured in different locations at varying times of day. We provided minimum percentage guidelines for different poses to enhance pose diversity, though subjects were not instructed to submit images with specific poses. Participants were permitted to submit previously captured images, as long as they met all specified requirements.

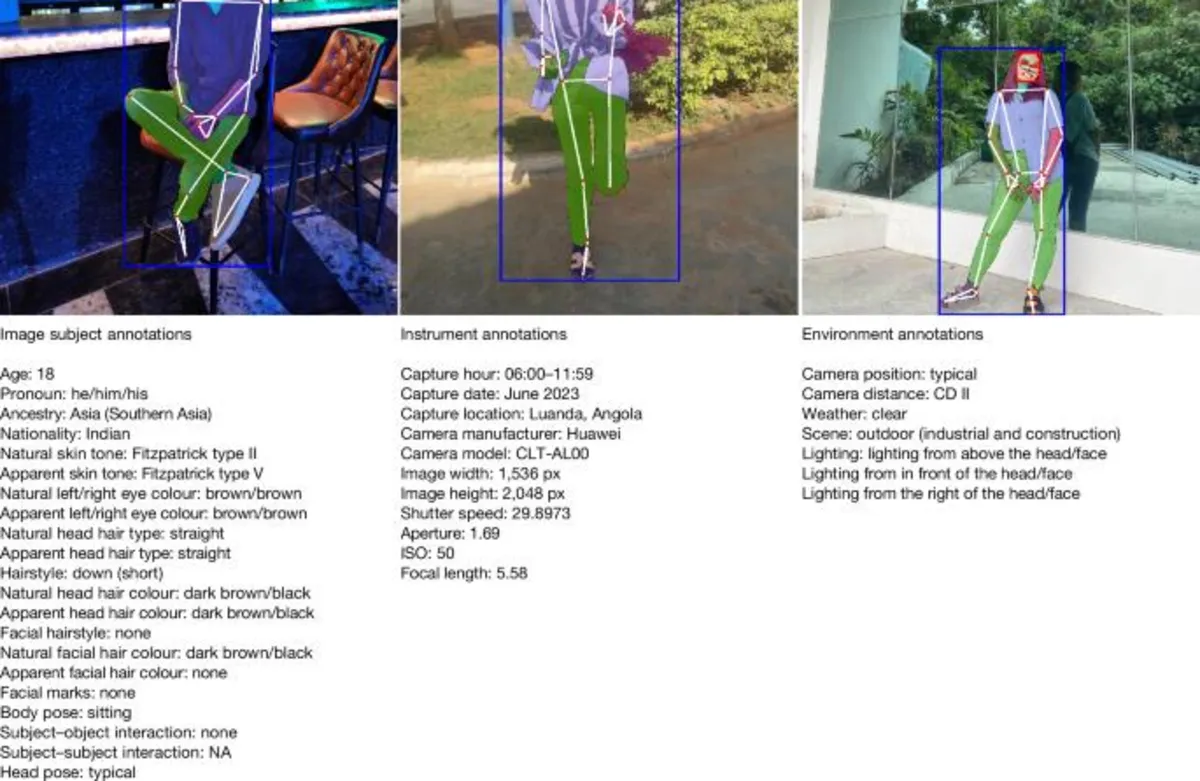

Our extensive annotation guidelines for data vendors included examples and detailed explanations. A complete list of annotations, their properties (including whether they were multiple-choice), categories, and annotation methods is available in Supplementary Information A. A significant aspect of our project was that most annotations were self-reported by image subjects, as they were best equipped to provide accurate information regarding their demographics, physical characteristics, interactions, and scene context.

The only annotations not self-reported were those that could be objectively observed from the images and would benefit from the consistency offered by professional annotators, including pixel-level annotations, head pose, and camera distance, as defined by the size of the subject's face bounding box. Our guidelines also included examples and instructions for annotating subject interactions and head pose, responding to requests from our data vendors due to ambiguities in labeling.

Quality control for images and annotations was conducted by both vendors and our internal team. Vendor QA annotators managed the first round of quality checks on images, annotations, and consent and IP rights forms. For non-self-reported annotations, vendor QA workers were permitted to modify annotations if deemed incorrect. For attributes that could be visualized (e.g., apparent eye color, facial marks), they could provide their annotations without overwriting the original self-reported data.

We developed various automated and manual checks to further examine the integrity of the images and annotations provided by vendors. Automated checks verified image integrity (e.g., readability), resolution, and absence of post-processing artifacts, while also assessing annotation reliability by comparing to inferred data. Internal consistency checks ensured that body keypoints were correctly positioned within body masks, duplicates were identified, and images were verified against existing images available online.

Determining whether image submitters were the actual subjects while maintaining privacy was challenging. To mitigate the risk of fraudulent submissions, we employed a combination of automated and manual checks to detect and remove images where we suspected that the subjects might not be the individuals who submitted them. Using tools like Google Cloud Vision API's Web Detect, we identified and excluded images that appeared to have been scraped from the Internet.

This conservative approach led to the removal of 321 images across 70 subjects, as we eliminated all images for a given subject if just one was found online. However, this automated method had limitations, including a high false-positive rate. Manual reviewers were instructed to track potentially suspicious patterns during their evaluation of images and consent forms, examining inconsistencies between self-reported data and image metadata.

Through careful planning and ethical considerations, we aimed to uphold the integrity of our data collection efforts while ensuring participants' rights and privacy. Our comprehensive approach, involving rigorous quality control measures and a focus on ethical recruitment practices, established a robust framework for creating a diverse and reliable dataset. The resulting dataset not only serves as a valuable resource for research but also emphasizes the importance of ethical standards in data collection.