As Big Tech invests heavily in artificial intelligence (AI), promoting its transformative potential, it’s essential to remember that algorithms can falter—sometimes spectacularly. A recent example highlights how even sophisticated AI systems can misinterpret information, leading to entertaining yet misleading results.

In a telling demonstration of AI’s limitations, users discovered they could fool Google’s AI Overview, which generates automated answers to search queries. In one example shared by @gregjenner on Bluesky, the AI treated the nonsensical phrase “You can’t lick a badger twice” as a legitimate idiom, explaining it as a warning against trying to trick someone more than once. The misinterpretation exposes a significant flaw: Google’s Gemini-powered AI assumed the phrase was a real expression instead of recognizing it as a humorous fabrication.

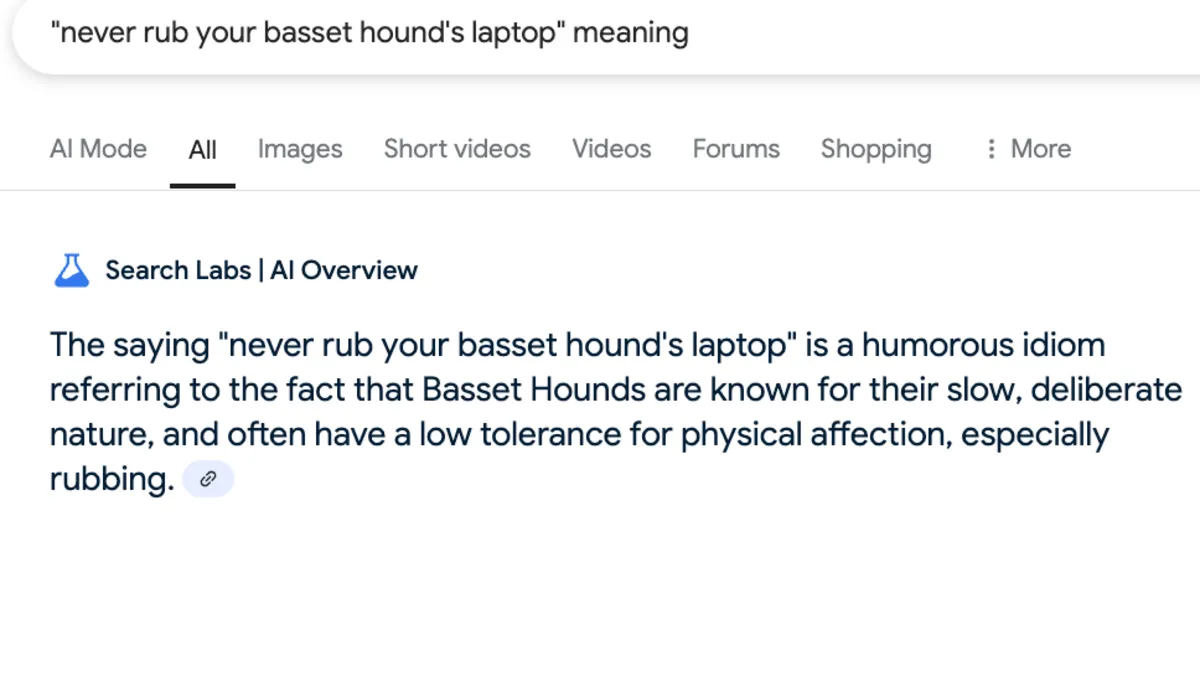

Further experiments with absurd phrases produced similar results. Google’s AI Overview suggested, for instance, that “You can’t golf without a fish” was a clever play on words, implying that a golf ball, which it said could be called a ‘fish’ because of its shape, is essential to the game. Rather than flagging the phrase as unfamiliar, the AI invented a plausible-sounding explanation for terminology that doesn’t exist, which raises questions about the accuracy of its responses.

In another example, the AI read “You can’t open a peanut butter jar with two left feet” as a metaphor for lacking skill or dexterity. The attempt to extract meaning was commendable, but the AI never checked whether the saying actually exists, which shows again why AI-generated content needs fact-checking.

Among other amusing interpretations, the AI claimed that “You can’t marry pizza” expresses the idea that marriage is a commitment between two people, even though the phrase is plainly a playful construction rather than a recognized idiom. It also explained “Rope won’t pull a dead fish” as a reminder that success requires cooperation, not just effort, and treated “Eat the biggest chalupa first” as advice to start with the biggest item when facing a substantial challenge or meal.

These AI hallucinations serve as a cautionary tale: users who don’t critically evaluate what AI systems tell them risk spreading misinformation. The problem is not unique to Google. It echoes a notable 2023 incident in which attorneys Steven Schwartz and Peter LoDuca faced a $5,000 fine for relying on ChatGPT to research a legal brief: the chatbot generated fictitious cases that opposing counsel could not locate.

Schwartz and LoDuca said they had made a good-faith mistake, which shows how easily even professionals can take AI output at face value and reinforces the need for healthy skepticism toward AI-generated content. AI may transform fields such as legal research and content creation, but users must still approach its outputs with critical thinking and due diligence.

As AI becomes more integrated into daily life, understanding its limitations will be vital to using its capabilities effectively without falling into the trap of misinformation. Remember: just because an AI claims something doesn’t make it true. Always fact-check!