Researchers at Stanford University have developed a brain-computer interface (BCI) capable of decoding inner speech, a significant step toward helping people with severe paralysis communicate. Traditional speech BCIs have primarily captured signals from the brain regions that translate the intention to speak into muscle movements. However, this approach requires patients to physically attempt to speak, which can be exhausting for people with conditions such as ALS or tetraplegia.

The BCI developed by the Stanford team circumvents the need for physical speech attempts by decoding what is referred to as inner speech: the silent dialogue we engage in while reading or thinking. This removes the physical strain of attempting to speak, but it also raises concerns about the privacy of a person's thoughts. To address these concerns, the researchers built in safeguards to protect users' mental privacy.

Historically, most neural prostheses designed for speech have relied on decoding attempted speech, because attempted movement generates strong signals. Benyamin Meschede Abramovich Krasa, a neuroscientist at Stanford and co-lead author of the study, noted that the vocal tract's function depends heavily on muscle movement. Recording signals from the motor cortex therefore lets researchers bypass the complexities of decoding higher-level language processing, which remains poorly understood.

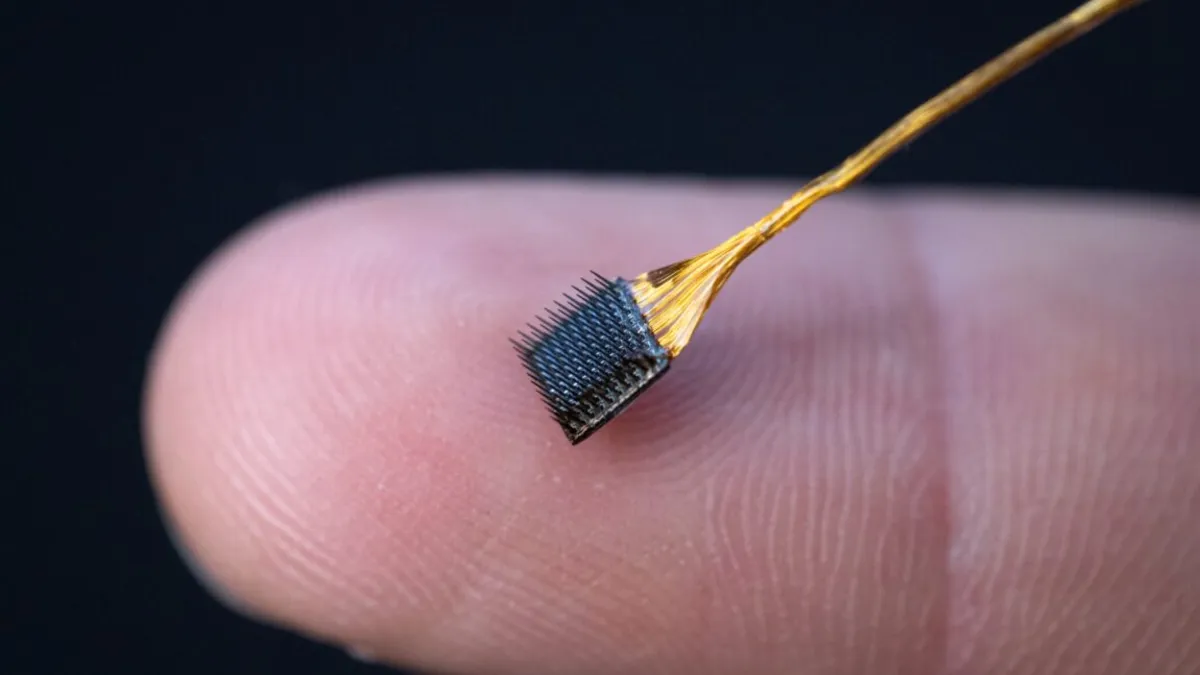

However, because attempting to speak takes considerable effort for individuals with severe motor disabilities, Krasa's team shifted its focus to silent speech. They began by collecting data to train artificial intelligence algorithms to interpret neural signals associated with inner speech and convert them into comprehensible words. The study involved four participants, each with microelectrode arrays implanted in various regions of the motor cortex, who performed tasks that included listening to recorded words and reading silently.

During their research, the team discovered that the signals associated with inner speech were represented in the same brain areas responsible for attempted speech. This finding raised an essential question: could systems trained to decode attempted speech inadvertently capture inner speech? The team tested this with their previously developed attempted speech decoding system and confirmed that it could indeed activate when participants imagined speaking sentences displayed on a screen.

The prospect of translating thoughts directly into words introduces significant privacy concerns, particularly given our limited understanding of brain function. In June 2025, a similar BCI was showcased at the University of California, Davis, where researchers claimed their system could differentiate between inner and attempted speech. Krasa's team, however, recognized the potential for accidental inner speech decoding and developed two safeguards to mitigate this risk.

The first safeguard automatically identified subtle differences between the brain signals for attempted and inner speech, so that the decoder's neural networks could be trained to ignore unintentional inner speech signals. The second safeguard required patients to mentally "speak" a specific password, "Chitty Chitty Bang Bang", to activate the prosthesis; the password was recognized with 98% accuracy. However, this method struggled with more complex commands.
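
The password safeguard can be pictured as a gate in front of the decoder's output. The sketch below is only an illustration of that idea, not the team's implementation: the real system recognizes the password from neural activity, whereas here already-decoded text strings stand in for it, and the names `PasswordGate`, `feed`, and `normalize` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Unlock phrase as reported in the article; everything else is an assumption.
UNLOCK_PHRASE = "chitty chitty bang bang"


def normalize(text: str) -> str:
    """Lowercase the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


@dataclass
class PasswordGate:
    """Hypothetical gate: discard decoded phrases until the password is seen."""

    unlock_phrase: str = UNLOCK_PHRASE
    unlocked: bool = False
    released: List[str] = field(default_factory=list)

    def feed(self, decoded_phrase: str) -> Optional[str]:
        """Return the phrase if the gate is open, otherwise None."""
        phrase = normalize(decoded_phrase)
        if not self.unlocked:
            # While locked, nothing is released. The password itself only
            # opens the gate and is never passed on as output.
            if phrase == normalize(self.unlock_phrase):
                self.unlocked = True
            return None
        self.released.append(phrase)
        return phrase


gate = PasswordGate()
gate.feed("I wonder what is for lunch")   # locked: discarded
gate.feed("Chitty Chitty Bang Bang")      # opens the gate
gate.feed("Please call my nurse")         # released
```

In this sketch, anything decoded before the password is dropped rather than buffered, which matches the privacy goal described above: unintended inner speech should never reach the output.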

Once the mental privacy safeguards were in place, the team began testing the inner speech system with cued words. Participants were shown short sentences and asked to imagine saying them. Accuracy reached 86% with a limited vocabulary of 50 words and fell to 74% when the vocabulary was expanded to 125,000 words. In unstructured inner speech tests, however, the BCI's performance was less impressive, often yielding results just above chance levels.
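
The article does not say how these accuracy figures were computed. Speech decoders are commonly scored by word error rate (WER), the word-level edit distance between the cued sentence and the decoded sentence divided by the length of the cued sentence. Assuming accuracy here means one minus WER, 86% would correspond to a WER of 0.14. The function below is a minimal sketch of that metric; `word_error_rate` is a hypothetical name, not something taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One of four cued words is missing from the decoded sentence.
print(word_error_rate("i want some water", "i want water"))  # 0.25
```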

When participants were asked to think of favorite foods or quotes without explicit cues, the decoder's output was largely nonsensical. Krasa said that, while the inner speech neural prosthesis serves as a proof of concept, its current error rates make it impractical for regular use. He suggested that hardware limitations and the precision of signal recording may contribute to these challenges.

Looking ahead, Krasa's team is pursuing two projects that stem from their work on the inner speech neural prosthesis. The first will compare the speed of an inner speech BCI with that of traditional attempted speech alternatives. The second focuses on individuals with aphasia, a condition in which motor control is intact but the ability to produce words is impaired. The researchers want to determine whether inner speech decoding could provide meaningful assistance for these individuals.

In summary, the development of this innovative BCI at Stanford University marks a significant step forward in the realm of neuroprosthetics, highlighting both the potential for assisting those with severe speech impairments and the importance of safeguarding mental privacy in the process.