A brain-computer interface (BCI) has enabled a paralyzed man to control a robotic arm simply by imagining the movements. The device, developed by researchers at UC San Francisco, operated reliably for an unprecedented seven months, far longer than previous BCIs, which typically worked for only a few days. It marks a significant advance in neuroprosthetics.

The key to this AI-enhanced BCI is its ability to adapt to the natural day-to-day shifts in brain activity. The system continuously learns from the user’s brain patterns and adjusts as the signals change, which keeps it stable and accurate over the long term. According to Karunesh Ganguly, MD, PhD, a neurologist and professor at UCSF, “This blending of learning between humans and AI is the next phase for these brain-computer interfaces.”

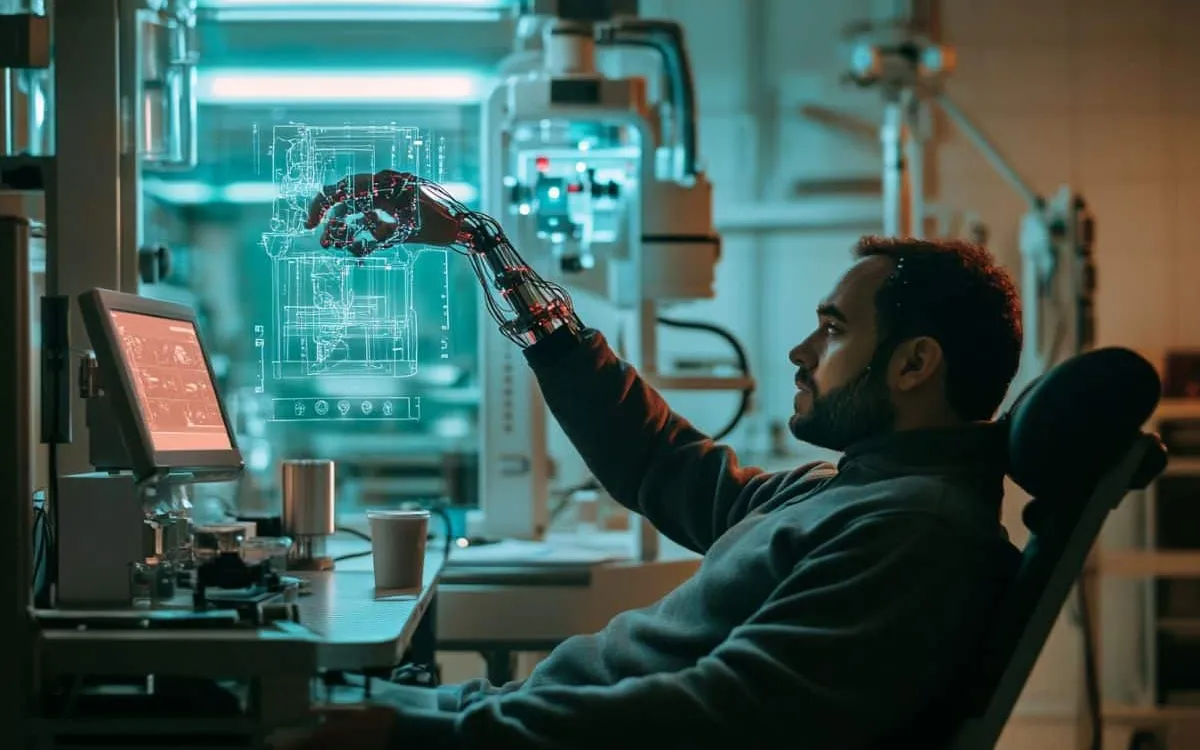

In a demonstration of the BCI's capabilities, the participant grasped, moved, and manipulated real-world objects with the robotic arm. After training with a virtual arm, he could perform tasks such as picking up blocks and even using a water dispenser. This represents a major milestone in restoring movement for individuals with paralysis.

The research, funded by the National Institutes of Health, was published in the journal Cell on March 6. The study involved a participant who had been paralyzed by a stroke and could neither speak nor move. Tiny sensors implanted in his brain recorded activity as he imagined moving different body parts. The study aimed to determine how brain activity patterns change over time, which is crucial for maintaining the BCI's effectiveness.

Ganguly and his colleague, Nikhilesh Natraj, PhD, trained the participant over two weeks to imagine specific finger and hand movements while the sensors recorded his brain activity. His initial attempts to control the robotic arm were imprecise, so he practiced with a virtual robot arm that gave him feedback and helped him refine his control. With that practice, he moved smoothly to operating the real robotic arm.

Months later, the participant could still control the robotic arm after only a 15-minute “tune-up” to account for changes in how his brain represented the movements. Ganguly is now refining the AI models to make the robotic arm move faster and more fluidly, and plans to test the BCI in home settings. For people with paralysis, the technology could be life-changing, enabling them to perform basic tasks like feeding themselves or getting a drink of water.

The researchers remain optimistic as they continue to develop and optimize the technology. “I’m very confident that we’ve learned how to build the system now, and that we can make this work,” Ganguly said. The ongoing research holds promise for neuroprosthetics and for improving the quality of life of people with limited mobility.

Other contributors to this study include Sarah Seko and Adelyn Tu-Chan from UCSF, along with Reza Abiri from the University of Rhode Island. The research received support from the National Institutes of Health (1 DP2 HD087955) and the UCSF Weill Institute for Neurosciences.

This long-lasting, AI-driven brain-computer interface is a substantial step forward in assisting people with paralysis. By letting users control robotic limbs through thought, it paves the way for greater independence and an improved quality of life.